Zipwire's evolutionary architecture: latent microservices


28 Jun 2023 by Luke Puplett - Founder

It was all Jeff's fault

It’s now more than 20 years since Jeff Bezos sent his famous “API Mandate” and perhaps 12 years since I read that Amazon’s home page was constructed from something like 100 API calls. Presumably a single API call may go on to make further calls and so on, and presumably that figure was with cold caches, but it was shocking to me that it worked this way.

To scale Amazon and pave the way for AWS, Jeff had to break the rapidly growing problem domain down into manageable pieces that could be run as “autonomous, single-threaded teams”, as they’re described by Colin Bryar and Bill Carr in Working Backwards. This meant splitting up their monolithic store codebase into services and communicating over well-documented API calls.

Up until then, Service Oriented Architecture (SOA) was a thing, but it had a terrible reputation as an enterprise thing with enterprise-grade Complexity-as-a-Service, products and consultants. SOA belonged to the era of XML and the Enterprise Message Bus, which I understand had its own rules. The mistake, supposedly, was in making the pipes too smart and presumably the endpoints too dumb.

Microservices, as it came to be known, was heralded as SOA done right, though the original sin was to take this misnomer to heart and make them too small. Or too big. No one really knew and still, to this day, I’m not sure if anyone knows how micro a microservice is supposed to be. Is it determined by type cohesion? Or by message passing and “chattiness”? Or by the size of the team needed to build and run it? I don’t know and fortunately that’s not the topic of this blog post.

As generally happens in software engineering, time passes, scars accumulate, people get promoted or retire, context is forgotten, cost curves decline and emotion and inertia wane. Eventually it’s socially acceptable not to be a true believer. Thinking we now know better, we move on.

The failure of agile

Randy Shoup once said something along the lines of...

“No one ever heard of the company that started with microservices, because it went bust before shipping anything of value.”

Wait. I've found a photo of him actually saying it.

Today, developers are asking “Why did we ever use microservices?” and then blaming themselves or finding a scapegoat in the self-appointed architects on the project. Product. I mean product. No, let’s be honest, it was a project and that’s partly why you went with microservices. You had to.

The vast majority of us have never worked on a real agile product. We think we know what agile means, but we don’t. I remember being shamed by the surly managers of my first proper software engineering job at a bank in Europe into thinking that what I’d been doing, up until they gave me a chance at being a grown-up developer, was being a cowboy coder. They used source control, sprints, burn down charts, Jira, and had Team City up high on a Plasma TV.

Before this gig I’d been writing helpful little apps at my desk for the 80-or-so people in the IT department around me. I used VB because it’s all I knew and compiled directly to a network share, dozens of times a day. I tested it myself, or just worked on stuff no one could see because there was no button into it unless it was on my machine. It wasn’t proper programming and I knew it and tried to hide that fact during interviews.

It took me another decade and endless study of the origins of tech startups to realise I’d been duped.

The agile going on in that room in a hot rental office behind the Bank of England was a charlatan; it was a ringer laid in the nest of agile, by the scrum cuckoo. The app was to be launched in a Big Bang event with a marketing campaign, and a follow-up release to fix things and cover off stuff we were late on.

The Waterscrumfall Project begets microservices because the Big Design Up-Front begets the Big Team Up-Front which is way too many pizzas.

That’s it in a nutshell. That’s primarily why software that shouldn’t be microservices, is. If you were truly building an agile solution you’d have begun with only one or two developers hacking on a single codebase, continually delivering value, and going where the winds of user feedback take them. I got duped so hard that I admit to coming into genuinely agile products and applying scrum and microservices to them.

Spaghetti agile

In my defence, this particularly large, genuinely agile product was mission critical, untested spaghetti. Beyond spaghetti code, this was Gordian Knot code. A hugely complex system that, at the core of its core, was a task runner. This system was so badly written and so untested that the code that ran the task never even checked its return value, and no one ever knew. And like the fabled knot, we needed to take a sword to it.

Is this technically mindless form of agile any better than its BDUF antagonist? I mean, look at it. It's barely recognisable as code.

That largely depends on whether the product is a significant income generator. If it is, then the cost of disentangling it should be a no-brainer, as in the many rewrites of Twitter, but if it’s a critical line-of-business application that’s not in and of itself creating revenue, then this kind of development is reckless negligence.

Ravioli agile

Although the waterscrumfall project I was working on was most certainly cuckoo agile, it wasn’t microservices and it wasn’t spaghetti either. It was ravioli cuckoo agile; if we’d delivered the thing to users from a few weeks in and started getting feedback, instead of sandbagging it for the big reveal, it’d have been perfect.

That said, it was sort of microservices. The app was one of the first of its kind in investment banking and trading: a platform for dealing foreign exchange, like IG's platform today (if you know that) but with a few more features for dealing with the traders behind the scenes. It was written in Microsoft Silverlight because it needed a super rich UI and to be delivered in a single click via a web browser to the bank’s clients.

It was a BDUF fat client which interfaced with some services to execute the trades. This meant breaking the codebase down into components that could be worked on by single developers. Most big apps up until then had been Windows fat clients, so there was a lot of experience in how to tame beastly frontends and some well known frameworks to help.

The way it worked was much like event-driven microservices. The main UI app loaded and then that continued to load the various UI components which were given targets in the UI to render into. Each component was separate like a square of ravioli and had knowledge of a small interface that represented its host, through which it would receive the UI object into which it could lay itself out, make API calls and register for messages, insert actions into menus in the host UI, as well as be notified when to shut down.

To the end user, these components seemed to be unified and acted like they knew of each other. A trade made in one windowed component would appear in the blotter in another window, and the menus changed in the main app depending on what was in focus in the windows.

The components only loosely knew of each other. They received data through network services, so actions in one component could have visible reactions in another. Or they could talk locally via message passing in-process; this is how menu buttons triggered actions in components.
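
The shape of that arrangement can be sketched roughly as below. This is Python rather than Silverlight, and every name in it (`Host`, `Shell`, `Blotter`, `TradeTicket`, the `"trade"` topic) is made up for illustration. For brevity the trade notification travels in-process here, whereas in the real app that data came back through network services; only the menu-to-component path was local message passing.

```python
from typing import Callable, Dict, List, Protocol


class Host(Protocol):
    """The small interface a component knows its host by (hypothetical names)."""

    def register_for(self, topic: str, handler: Callable[[dict], None]) -> None: ...
    def send(self, topic: str, message: dict) -> None: ...
    def add_menu_action(self, label: str, action: Callable[[], None]) -> None: ...


class Shell:
    """Minimal host: owns the menu and routes messages in-process."""

    def __init__(self) -> None:
        self.menu: Dict[str, Callable[[], None]] = {}
        self._handlers: Dict[str, List[Callable[[dict], None]]] = {}

    def register_for(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._handlers.setdefault(topic, []).append(handler)

    def send(self, topic: str, message: dict) -> None:
        for handler in self._handlers.get(topic, []):
            handler(message)

    def add_menu_action(self, label: str, action: Callable[[], None]) -> None:
        self.menu[label] = action


class Blotter:
    """Lists the trades it hears about; knows nothing of the ticket component."""

    def __init__(self, host: Host) -> None:
        self.rows: List[dict] = []
        host.register_for("trade", self.rows.append)


class TradeTicket:
    """Inserts an action into the host's menu and announces trades via the host."""

    def __init__(self, host: Host) -> None:
        self._host = host
        host.add_menu_action("Buy EUR/USD", self.buy)

    def buy(self) -> None:
        self._host.send("trade", {"pair": "EUR/USD", "side": "buy"})
```

Neither component imports the other; each square of ravioli only ever holds a reference to its host.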

Once loaded, the monolithic process served up pixels and responded to clicks, but it could easily have served up hypertext and responded to HTTP requests.

Latent microservices

Zipwire is a monolith. It has many vertical feature areas which communicate either directly, or over an asynchronous message bus. Each vertical has its own storage and never talks directly to the storage of other verticals. The message bus sounds fancy pants but is nothing more than a little class which lives forever in-process and holds a collection of subscription callbacks; it is not an external message queue.

All the verticals register their callback functions with my hand-made message bus in the blink of an eye when the process starts up. Each vertical has a CQRS-style facade of commands and queries through which large-grain work is routed.
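
To show how little there is to a bus like this, here is a minimal sketch in Python (not the .NET code Zipwire actually runs). The `MessageBus` class and the `OrganisationCreated` event are hypothetical names, and dispatch is synchronous here to keep it short, whereas the real bus is asynchronous.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class OrganisationCreated:
    """Hypothetical event; carries only primitive values."""
    organisation_id: str
    name: str


class MessageBus:
    """Lives for the life of the process and holds subscription callbacks."""

    def __init__(self) -> None:
        self._subscribers: Dict[type, List[Callable[[object], None]]] = {}

    def subscribe(self, event_type: type, callback: Callable[[object], None]) -> None:
        # Each vertical calls this once at start-up.
        self._subscribers.setdefault(event_type, []).append(callback)

    def publish(self, event: object) -> None:
        # Call every callback registered for this exact event type.
        for callback in list(self._subscribers.get(type(event), [])):
            callback(event)
```

A vertical would call `bus.subscribe(OrganisationCreated, handler)` at start-up, and anything may later call `bus.publish(OrganisationCreated("org-1", "Acme"))`.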

Standing up all these vertical pillars and then laying across them, like the roof of the Acropolis, is an ASP.NET website. It injects the commands and queries into the HTTP controller classes, and also into each other. This makes the cross-wiring visible and explicit. It’s needed because some commands or queries need to directly call across to the queries in another vertical to combine data.

Fishy code is more obvious; seeing a command from vertical A in the constructor of a command in vertical B rouses suspicion. Why is code in A mutating stuff in B? B should ideally be watching for events and mutating stuff asynchronously; doing it eventually.
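
A sketch of what that visibility looks like, in Python with made-up verticals and names (`MembershipQueries`, `TimesheetQueries`) rather than anything lifted from Zipwire. The point is only that a foreign dependency has to appear in a constructor, where a reviewer can see it.

```python
from typing import Dict, List


class MembershipQueries:
    """Query facade of a hypothetical memberships vertical, with its own storage."""

    def __init__(self) -> None:
        self._members_by_team: Dict[str, List[str]] = {"team-1": ["ada", "grace"]}

    def member_ids(self, team_id: str) -> List[str]:
        return list(self._members_by_team.get(team_id, []))


class TimesheetQueries:
    """Query facade of a hypothetical timesheets vertical.

    The foreign facade is declared in the constructor, so the
    cross-vertical wiring is explicit rather than buried in a method.
    """

    def __init__(self, memberships: MembershipQueries) -> None:
        self._memberships = memberships
        self._hours: Dict[str, float] = {"ada": 7.5, "grace": 8.0}

    def hours_for_team(self, team_id: str) -> Dict[str, float]:
        # Combine data via the other vertical's facade, never by
        # reading the other vertical's storage directly.
        members = self._memberships.member_ids(team_id)
        return {person: self._hours.get(person, 0.0) for person in members}
```

A query consuming a foreign query like this is the acceptable case. Had the constructor taken a foreign command instead, that would be the fishy one.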

ASP.NET controller classes handle HTTP requests and use either commands or queries as needed to get things done or pull information up and wrangle it for display.

Each vertical has what I unimaginatively call a “service” which observes all the events on the bus and handles just those it might be interested in, reacting to the stuff going on, calling down to commands and queries as needed.
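
Roughly, such a service might look like the following Python sketch. The event and command names are invented for illustration, and the command's list is only a stand-in for the vertical's real storage.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class OrganisationCreated:
    organisation_id: str
    owner_id: str


@dataclass(frozen=True)
class TimesheetApproved:
    timesheet_id: str


class AddMemberCommand:
    """Command in a hypothetical memberships vertical."""

    def __init__(self) -> None:
        self.added: List[Tuple[str, str]] = []  # stand-in for the vertical's storage

    def execute(self, organisation_id: str, person_id: str) -> None:
        self.added.append((organisation_id, person_id))


class MembershipService:
    """Sees every event on the bus and reacts only to the ones it cares about."""

    def __init__(self, add_member: AddMemberCommand) -> None:
        self._add_member = add_member

    def handle(self, event: object) -> None:
        if isinstance(event, OrganisationCreated):
            # React by calling down to the vertical's own command.
            self._add_member.execute(event.organisation_id, event.owner_id)
        # Anything else on the bus is none of this vertical's business.
```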

The design goal is to divide the codebase up, prevent types from different feature areas from permeating all code everywhere, and allow the application to be broken up into real microservices if that was ever deemed the right thing to do.

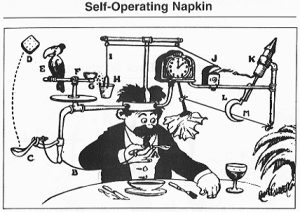

Disentangling code is largely about separating types and libraries from each other's business. Spaghetti code tends to have things happening “all over the place” kind of like a Rube Goldberg machine, and also has all its types mixed up, such that dependencies are criss-crossing “randomly”.

By building in verticals and using events, the event types serve as a customs checkpoint where “you can’t take that thing through here, mate”, or security in an airport where you take a look at your baggage and throw out bottles of liquid etc. That is, you can’t use a type from vertical A in vertical B, you have to transfer the data onto the event type using only strings and ints etc.

The same is true of the commands and queries. These facades enforce the same sort of rules. The query facade might well return types from its domain, but these can be considered and planned for and placed in the right library, probably alongside the query facade interface.
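
The customs checkpoint can be sketched like this, in Python with hypothetical types (`Assignment`, `PersonAssigned`). The domain type stays inside its vertical and only strings cross the border.

```python
import json
from dataclasses import asdict, dataclass
from datetime import date


@dataclass
class Assignment:
    """Domain type private to a hypothetical assignments vertical."""
    assignment_id: str
    person_id: str
    client_name: str
    starts_on: date


@dataclass(frozen=True)
class PersonAssigned:
    """Event type: primitives only, so no domain type leaks into other verticals."""
    assignment_id: str
    person_id: str
    client_name: str
    starts_on: str  # ISO 8601 date as text


def to_event(assignment: Assignment) -> PersonAssigned:
    # Transfer the data onto the event, field by field.
    return PersonAssigned(
        assignment_id=assignment.assignment_id,
        person_id=assignment.person_id,
        client_name=assignment.client_name,
        starts_on=assignment.starts_on.isoformat(),
    )


event = to_event(Assignment("a-1", "p-1", "Acme", date(2023, 6, 28)))
wire_text = json.dumps(asdict(event))  # works because every field is a primitive
```

A handy side effect of the primitives-only rule is that the event already serialises to JSON without any custom encoders.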

Eventual or not

There’s a simple rule about when to use eventual, asynchronous side-effects or direct, synchronous mutations for a website or web service.

If the response requires the effects, it must be done synchronously, and that depends on the UX. E.g. if the UI is a team membership editor, then the response to the request to add a new member to a team should be the latest view of memberships, including the changes just made.

But if the UI is, say, creating a new organisation, and some business logic configures default memberships that the UI doesn’t show, then those memberships are incidental and can be done eventually, i.e. the vertical that does memberships can watch for a new organisation event and then add the memberships or something.

By the time the user visits the memberships UI, they’ll be there. Essentially, the decision is driven by whether the side-effect is impacting stuff in the direct purview of the user, their current UI; if it’s in another UI on another page then it can be done eventually.
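
The two cases side by side, as a toy Python sketch. The function names are invented, a `deque` stands in for the asynchronous bus, and the new organisation's ID doubles as the key for its default memberships purely to keep the example small.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple

memberships: Dict[str, List[str]] = {}      # storage of the memberships vertical
pending: Deque[Tuple[str, str]] = deque()   # stand-in for the asynchronous bus


def add_member(team_id: str, person_id: str) -> List[str]:
    """Synchronous: the editor's response must already show the change."""
    memberships.setdefault(team_id, []).append(person_id)
    return list(memberships[team_id])


def create_organisation(organisation_id: str, owner_id: str) -> str:
    """Eventual: the response shows no memberships, so just announce the event."""
    pending.append((organisation_id, owner_id))
    return organisation_id


def deliver_pending() -> None:
    """Runs some time later: the memberships vertical reacts to each event."""
    while pending:
        organisation_id, owner_id = pending.popleft()
        memberships.setdefault(organisation_id, []).append(owner_id)
```

`add_member` returns the latest view because the user is looking straight at it. `create_organisation` returns before any membership exists, which is fine as long as nothing on that page displays one.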

If you have a realtime UI, where updates are pushed to the client over a socket, then you can design it all to be eventual and push updates to the client.

Evolution

No vertical has ever actually been broken out into a service. This is important to admit, because an untested escape route is exactly why we practice fire drills; the drill keeps the fire doors from accumulating boxes and rusting up, and also helps expose logistical realities.

However, I have frequently sat eyes closed thinking about the steps necessary to break a vertical off.

It would start with the need for a real message queue, which can be retrofitted and replaced within the monolith and all event classes double-checked as being serializable on and off the queue. Then some of the commands and queries would need to be made available over the network, not least because the ASP.NET controllers will need to call them over the network to the new process running the broken-off microservice, but also because a command can consume queries facades from foreign verticals, and these calls would no longer be in-process either.
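
The first step might look something like this Python sketch: a bus with the same publish and subscribe surface, but which forces every event through JSON text the way an external queue would. The standard library `queue.Queue` is only a stand-in for a real message queue, and `QueueBackedBus`, `EVENT_TYPES` and `PersonAssigned` are hypothetical names.

```python
import json
import queue
from dataclasses import asdict, dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class PersonAssigned:
    assignment_id: str
    person_id: str


# Registry used to rebuild events coming off the queue (an assumption of this sketch).
EVENT_TYPES = {"PersonAssigned": PersonAssigned}


class QueueBackedBus:
    """Same surface as an in-process bus, but events travel as JSON text."""

    def __init__(self) -> None:
        self._queue: queue.Queue = queue.Queue()
        self._subscribers: Dict[str, List[Callable[[object], None]]] = {}

    def subscribe(self, event_type: type, callback: Callable[[object], None]) -> None:
        self._subscribers.setdefault(event_type.__name__, []).append(callback)

    def publish(self, event: object) -> None:
        envelope = {"type": type(event).__name__, "body": asdict(event)}
        # json.dumps raises TypeError if a field is not JSON-safe, which is
        # the double-check on serialisability done while still in the monolith.
        self._queue.put(json.dumps(envelope))

    def deliver_pending(self) -> None:
        while not self._queue.empty():
            envelope = json.loads(self._queue.get())
            event = EVENT_TYPES[envelope["type"]](**envelope["body"])
            for callback in self._subscribers.get(envelope["type"], []):
                callback(event)
```

Running the monolith on a bus like this for a while would flush out any event that quietly relied on sharing an in-memory object.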

Breaking up the monolith isn’t the only type of evolution. The most common change is to create a new feature within a vertical or to create a whole new vertical stack. Given that the features aren’t all plugged in to the same data store, how does a feature bootstrap itself?

A tangible example in Zipwire is the business card feature. Timesheet senders, approvers and processors all have a business card on their dashboard showing the company and their client assignment. This appears when they’re added to an assignment and is removed afterwards.

The dashboard is its own vertical and has its own storage. It updates its items based on what it hears on the grapevine, i.e. events. The problem comes when the feature is released after the events have happened. In this case, the person has already been assigned and that event has gone, so the dash won’t see the event and the business card won’t appear.

There are four ways to solve this:

1. It’s not important, let’s allow the card to show up in its own time, on the next assignment.
2. Have the feature in the vertical bootstrap itself by querying foreign verticals to get its assignments and put the card up.
3. Force an event via an engineer-only API.
4. Replay an event.

Number 3 can be tricky if there are other services that’ll respond to it and make changes or produce effects, though the event and your listeners can be designed to ignore events unless their name or a wildcard is in an “audience” field.
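
A minimal Python sketch of the audience idea follows. The field name `audience`, the `"*"` wildcard and the listener names are all assumptions for illustration; Zipwire has nothing like this yet.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PersonAssigned:
    assignment_id: str
    person_id: str
    # Ordinary events are addressed to everyone via the wildcard.
    audience: Tuple[str, ...] = ("*",)


def is_addressed_to(listener_name: str, event: PersonAssigned) -> bool:
    """A listener handles an event only if it is named or the wildcard is present."""
    return "*" in event.audience or listener_name in event.audience


# An engineer forces the event for the dashboard alone.
forced = PersonAssigned("a-1", "p-1", audience=("dashboard",))
```

With `forced`, the dashboard puts its card up while a payroll listener, say, stays quiet.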

Number 4 is only possible if your listening services know to ignore old events. One way to do this is by storing the event IDs (a nonce) and using an ID that’s semi-sequential.

For example, the ID 2023-W26-2-a76s6c87fg3m begins with an ISO 8601 week date: the year, the week number, and the day of the week. A service can be written to store the IDs of all the events it has seen into daily buckets, checkpointing and discarding buckets that are a couple of days old, then outright rejecting any IDs that would have been in those buckets.
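
Here is how that could be sketched in Python. `ReplayGuard` and `bucket_date` are hypothetical names, the buckets live in memory rather than being checkpointed anywhere, and `date.fromisocalendar` needs Python 3.8 or later.

```python
from datetime import date, timedelta
from typing import Dict, Set


def bucket_date(event_id: str) -> date:
    """Parse the ISO week-date prefix of an ID such as '2023-W26-2-a76s6c87fg3m'."""
    year, week, day, _nonce = event_id.split("-", 3)
    return date.fromisocalendar(int(year), int(week.lstrip("W")), int(day))


class ReplayGuard:
    """Remembers recent event IDs in daily buckets and rejects anything older."""

    def __init__(self, keep_days: int = 2) -> None:
        self._keep = timedelta(days=keep_days)
        self._buckets: Dict[date, Set[str]] = {}

    def accept(self, event_id: str, today: date) -> bool:
        cutoff = today - self._keep
        # Discard buckets that have aged out.
        for old_day in [d for d in self._buckets if d < cutoff]:
            del self._buckets[old_day]
        day = bucket_date(event_id)
        if day < cutoff:
            return False  # would have lived in a discarded bucket
        bucket = self._buckets.setdefault(day, set())
        if event_id in bucket:
            return False  # already seen: a replay
        bucket.add(event_id)
        return True
```

Because the date is in the ID itself, the guard never needs to keep more than a few days of IDs to know that an old event is old.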

At present Zipwire does none of this clever stuff and so I tend to use number 1 or 2. I might implement the semi-sequential nonce anti-replay design if I need it.
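
Number 2, the bootstrap, is simple enough to sketch. This is Python with invented names (`AssignmentQueries`, `Dashboard`), not Zipwire's actual code, and it assumes the foreign vertical exposes a query listing current assignments.

```python
from typing import Dict


class AssignmentQueries:
    """Query facade of a hypothetical assignments vertical."""

    def __init__(self, assignments: Dict[str, str]) -> None:
        self._assignments = assignments  # person ID -> client name

    def current_assignments(self) -> Dict[str, str]:
        return dict(self._assignments)


class Dashboard:
    """Dashboard vertical with its own storage of business cards."""

    def __init__(self) -> None:
        self.cards: Dict[str, str] = {}

    def on_person_assigned(self, person_id: str, client_name: str) -> None:
        # The normal path: react to an event heard on the grapevine.
        self.cards[person_id] = client_name

    def bootstrap(self, assignments: AssignmentQueries) -> int:
        """One-off catch-up for assignments made before the feature shipped."""
        added = 0
        for person_id, client_name in assignments.current_assignments().items():
            if person_id not in self.cards:
                self.cards[person_id] = client_name
                added += 1
        return added
```

The bootstrap skips anyone who already has a card, so running it twice does no harm.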

The Sirens of complexity

And that’s it. It’s funny that as I write about doing this, the challenge and wonder of it makes it feel like an appealing thing to do now. And that’s another strong reason we all loved microservices: they were more interesting. When the team is highly experienced and highly technical, and removed from the fun of building stuff for customers, they tend to derive programming pleasure from the challenge of engineering itself, and that tends to lead to complexity.

Conclusion

To recap, then.

- We used microservices because they were fun, impressive, and could be sold to management as a solution for having something for all 22 developers to do, from week one.
- Yes, you could have just written a well-factored codebase in discrete parcels while maintaining a plan for how you'd scale it.

That's lovely and everything but what is Zipwire?

Zipwire Collect handles document collection for KYC, KYB, AML, RTW and RTR compliance. Used by recruiters, agencies, landlords, accountants, solicitors and anyone needing to gather and verify ID documents.

Zipwire Approve manages contractor timesheets and payments for recruiters, agencies and people ops. Features WhatsApp time tracking, approval workflows and reporting to cut paperwork, not corners.

Zipwire Attest provides self-service identity verification with blockchain attestations for proof of personhood, proof of age, and selective disclosure of passport details and AML results.

For contractors & temps, Zipwire Approve handles time journalling via WhatsApp, and techies can even use the command line. It pings your boss for approval, reducing friction and speeding up payday. Imagine just speaking what you worked on into your phone or car, and a few days later, money arrives. We've done the first part and now we're working on instant pay.

All three solutions aim to streamline workflows and ensure compliance, making work life easier for all parties involved. It's free for small teams, and you pay only for what you use.